2024-05 edition of AI & the web

Jason Mayes on Web AI, Chrome & Edge with native AI, spectacular new transformers.js models

tl;dr

Jason Mayes speaks on Web AI | Google Chrome & Microsoft Edge get native AI integration | Xenova stuns again with Phi-3 & Moondream running in transformers.js / ONNX

AI conversations

Jason Mayes on Web AI

Jason is Web AI lead at Google and author of the successful WebML newsletter. I spoke with Jason over LinkedIn about Google I/O, the latest developments in Web AI and Visual Blocks.

Jan: Jason, congratulations on a very successful Google I/O conference. We are very happy you could spare some time to talk to us after probably some very busy weeks! Let's start off with a few words about yourself and how you got into AI in the first place.

Jason: So, I define myself as a creative engineer: someone who can merge the creative and technical worlds to innovate and create world firsts across industries, knowing just enough across a wide range of areas to combine them to solve real problems.

Currently I lead Web AI at Google globally. This is where we run machine learning models entirely client side on the web browser to gain privacy, low latency, and even cost savings vs Cloud AI.

How did I start in AI? Well, it started back in 2015, just before TensorFlow was announced publicly. I was tasked with a project for a client in which I needed to recognise different types of soda cans to trigger a creative experience.

After much investigation, traditional computer vision techniques at the time were just not robust enough to recognise those cans accurately enough. So I turned to speaking with the leaders of the TensorFlow team to see if I could repurpose an image classification model that they had made.

Turns out I could, but I was shocked at how hard it was to retrain that model (there were no courses or fancy low code cloud systems back then, and even the engineers on the TensorFlow team had issues running some of their scripts reliably). So I took it upon myself to take my learnings and create my own simpler cloud based system: given a video of some object, it would train a custom image classification model and then give you an easy to use JavaScript API to which you could send an image, and it would decide whether that original object was in the image or not.

That world-first cloud system I made went on to become Google Cloud AutoML Vision! That was my start in the world of AI. I love to innovate, inspire, and then simplify processes so they can be useful to all.

Jan: TensorFlow.js (tfjs), the JavaScript version of the TensorFlow ML framework you mentioned, is one of the earliest and most popular ML frameworks for the web, with roughly 1.1M monthly downloads on npm. Can you tell us a bit about how it started and what the current state of tfjs is?

Jason: So TensorFlow.js started life in 2016 as DeepLearn.js, born from the research arm of Google. It was at this point folk realized that it was more useful to industry, so it evolved into the TensorFlow.js we know today. I joined the team in 2019 to educate engineers on how they can leverage it in real world applications, taking its linear growth exponential from 2020 onwards. During this time other research teams also contributed to the Web AI space, like the MediaPipe Web team, and in the last two years alone TensorFlow.js and MediaPipe models and libraries have had over 1.2 billion downloads! Web AI is exploding in usage currently given new support for WebGPU, which allows us to even run Gen AI in the browser entirely client side at incredible speeds if you have a GPU to leverage.

Jan: WebGPU is indeed exciting and I'll get back to that in a minute. Let's talk a bit about MediaPipe, Google's other ML framework for the web that also supports generative AI. Is MediaPipe the successor to tfjs, or what is the distinction?

Jason: No, they are two different things. TensorFlow.js is a machine learning library with all the building blocks you need to make any model you dream up. Like a bunch of Legos. MediaPipe is a set of cutting edge models you can use to perform certain tasks, like body segmentation for example. However, it uses its own runtime to execute instead of the TensorFlow.js runtime, but it also leverages tech like WebGPU and WebAssembly.

Jan: Ok gotcha, so MediaPipe is more of a high-level library in comparison to tfjs. What are your current plans for TensorFlow.js and MediaPipe?

Jason: Continued teaching / raising awareness of both.

Jan: Let's talk about the new built-in AI in Chrome that was announced at Google I/O. Can you tell us a bit about it?

Jason: So we are exploring how developers can benefit from having popular models downloaded once and accelerated natively by the browser. It is experimental right now, so we welcome feedback, but we look forward to seeing how this evolves to potentially provide web devs with stable APIs they can use for AI that work across domains etc.

Jan: What is the relation to the new WebNN API? Are you working together with the W3C on this or is it a separate experimental API?

Jason: WebNN is something all major browsers are contributing to for the longer term, but it is not fully baked yet. Maybe some of these explorations could make it into such a standard one day. To be determined.

Jan: What performance speedup do you expect of a native integration in comparison to running a model with "plain" WebGPU e.g. via MediaPipe?

Jason: Unknown right now.

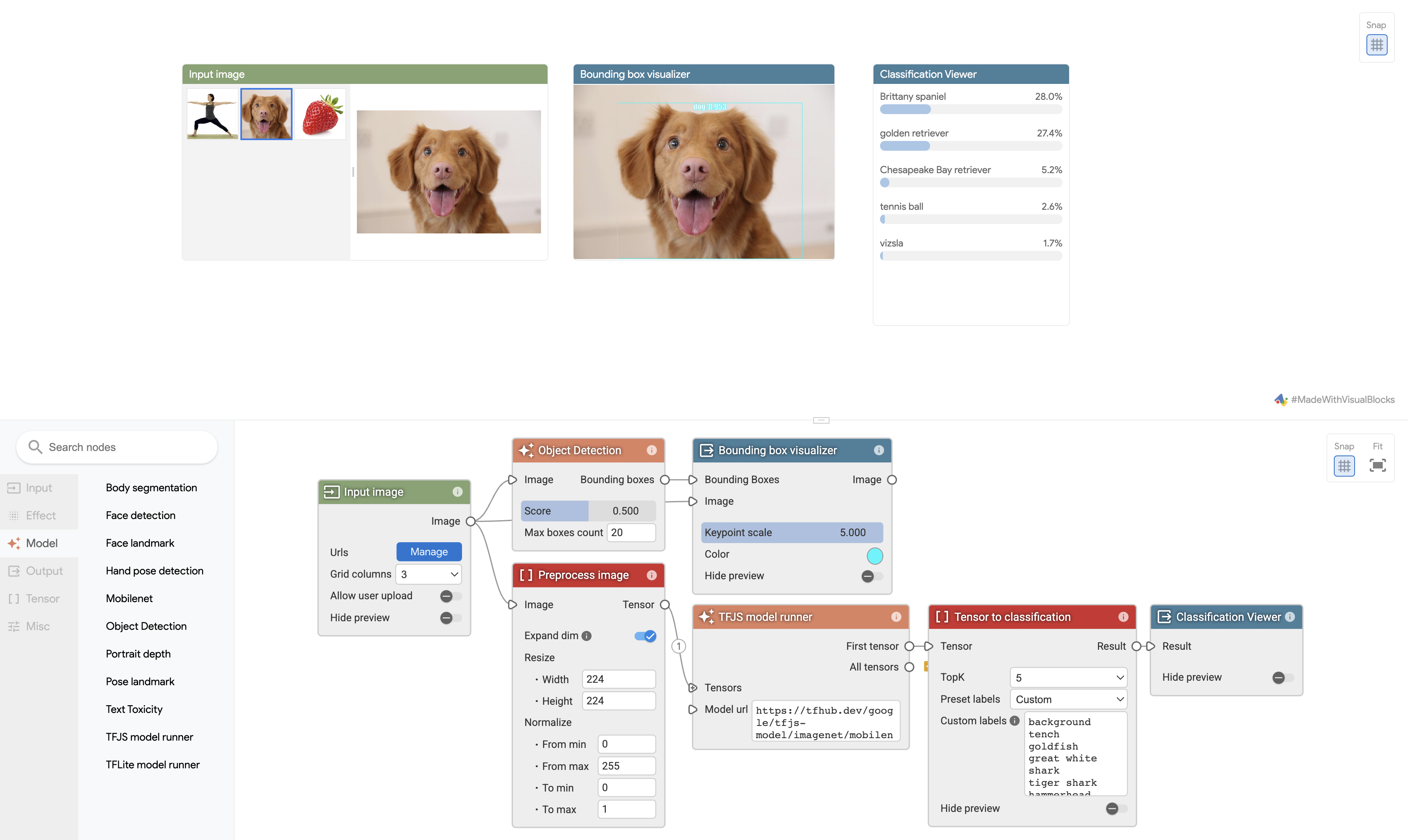

Jan: Understood, still hot off the press. Looking forward to seeing how this develops, and I will keep an eye on it! At Google I/O you also presented Visual Blocks, a visual programming framework to create ML pipelines that you and your team have been working on. I tried it out myself and created a pipeline that connects to my webcam, draws a bounding box around my body and detects my face position in real time. Quite cool! What's next on your agenda for Visual Blocks?

Jason: Now that we have enabled custom nodes, we look forward to seeing what you all make and share, so the repository of nodes you can use in seconds expands for common reusable things! We also look forward to seeing how companies use VB for their own internal-only pipelines too, for experimentation and innovation with AI. Longer term we would like to investigate exporting pipelines in an easier manner, so folk can then treat a pipeline like a "black box" to use on a production website without the Visual Blocks user interface showing, and integrate it with their own custom user interface instead.

Jan: One last question: What do you think will be the big trends in AI and especially Web AI in the next few years?

Jason: I think robotics will have its own moment similar to how diffusion models and LLMs took off for images and text. For Web AI I believe with the growing awareness of privacy and cost of using cloud based systems we shall be a big part of "the great shrinking" of models. I expect we will see more research into smaller LLMs that are highly efficient and adept at niche tasks that people can download and use on device via Web AI. Consumers of AI will grow to expect certain "smarts" on popular websites, and these more niche models would provide those abilities at scale while maintaining low latency, lower costs to the company, and privacy to the user. Imagine you go to a legal website to summarize a contract you just received in a way you can understand, for example, or maybe you have a Chrome extension that works on any website you visit to explain technical terms in the moment, without sending that block of text to a server to get an answer (maybe you are on a logged-in site with private content).

TLDR: Smaller, faster, more accurate models for common domains across the board that can run on a single GPU.

I therefore also predict companies like Intel, NVIDIA, AMD, Arm etc will look into shared GPU / CPU RAM architectures too so if you have 32GB CPU RAM, that same RAM could be used by the GPU too, further improving the number of models you can run on your device as AI becomes as prevalent as the Web is today.

Jan: Jason, thank you so much for your time!

Jason: Welcome.

Latest developments

Google I/O

News about Web AI in May was dominated by Google's annual conference, Google I/O, with tons of interesting releases. Check out all AI & Web related sessions here:

Some of the highlights:

Chrome receives built-in Web AI

Chrome will receive built-in Web AI that web developers can use directly via a Web API, with no need for an external framework. It is currently in a closed, experimental early preview that developers can register for. Read the full story here:

Web AI

Probably the talk most related to the topic of this newsletter was Jason's talk on Web AI at Google. Check out the full session here:

One of the cool things presented was Visual Blocks, a visual programming framework to compose ML models. Highly recommended to play around with it:

WASM & WebGPU improvements

Google is taking giant steps in further improving the performance and feasibility of AI on the web, with loads of improvements to WASM and WebGPU support in Chrome. Check out the session:

Some of the highlights:

Relaxed SIMD resulting in 1.5-3x speedup

FP16 support

Memory64 shipping later this year

Packed Integer Dot Products

Subgroups (I know Christopher is very keen on this one)

Cooperative Matrix Multiply
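To give a feel for one item on this list, here is a minimal sketch of what a packed integer dot product computes: four signed 8-bit values are packed into each 32-bit word, and the four lane-wise products are summed in one operation. This is a plain TypeScript reference for illustration only, not how Chrome implements it. The function names are my own, chosen to mirror the WGSL built-in `dot4I8Packed`, and the lane order (lowest lane in the least significant byte) is an assumption of this sketch.

```typescript
// Illustrative reference only: names and lane order are assumptions of this sketch.

// Packs four signed 8-bit integers (each expected in [-128, 127]) into one
// unsigned 32-bit word, lowest lane in the least significant byte.
function pack4xI8(lanes: readonly [number, number, number, number]): number {
  let word = 0;
  lanes.forEach((value, lane) => {
    word |= (value & 0xff) << (8 * lane);
  });
  return word >>> 0; // reinterpret the result as an unsigned 32-bit value
}

// Treats `a` and `b` as four packed signed 8-bit lanes each and returns the
// sum of the four lane-wise products.
function dot4I8Packed(a: number, b: number): number {
  let sum = 0;
  for (let lane = 0; lane < 4; lane++) {
    // Move the lane into the top byte, then arithmetic-shift back down so
    // the sign bit of the 8-bit value is extended.
    const x = (a << (24 - 8 * lane)) >> 24;
    const y = (b << (24 - 8 * lane)) >> 24;
    sum += x * y;
  }
  return sum;
}
```

Calling `dot4I8Packed(pack4xI8([1, -2, 3, -4]), pack4xI8([5, 6, -7, 8]))` multiplies the lanes pairwise and adds them up. This kind of operation is typically of interest for models whose weights have been quantized to 8-bit integers.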

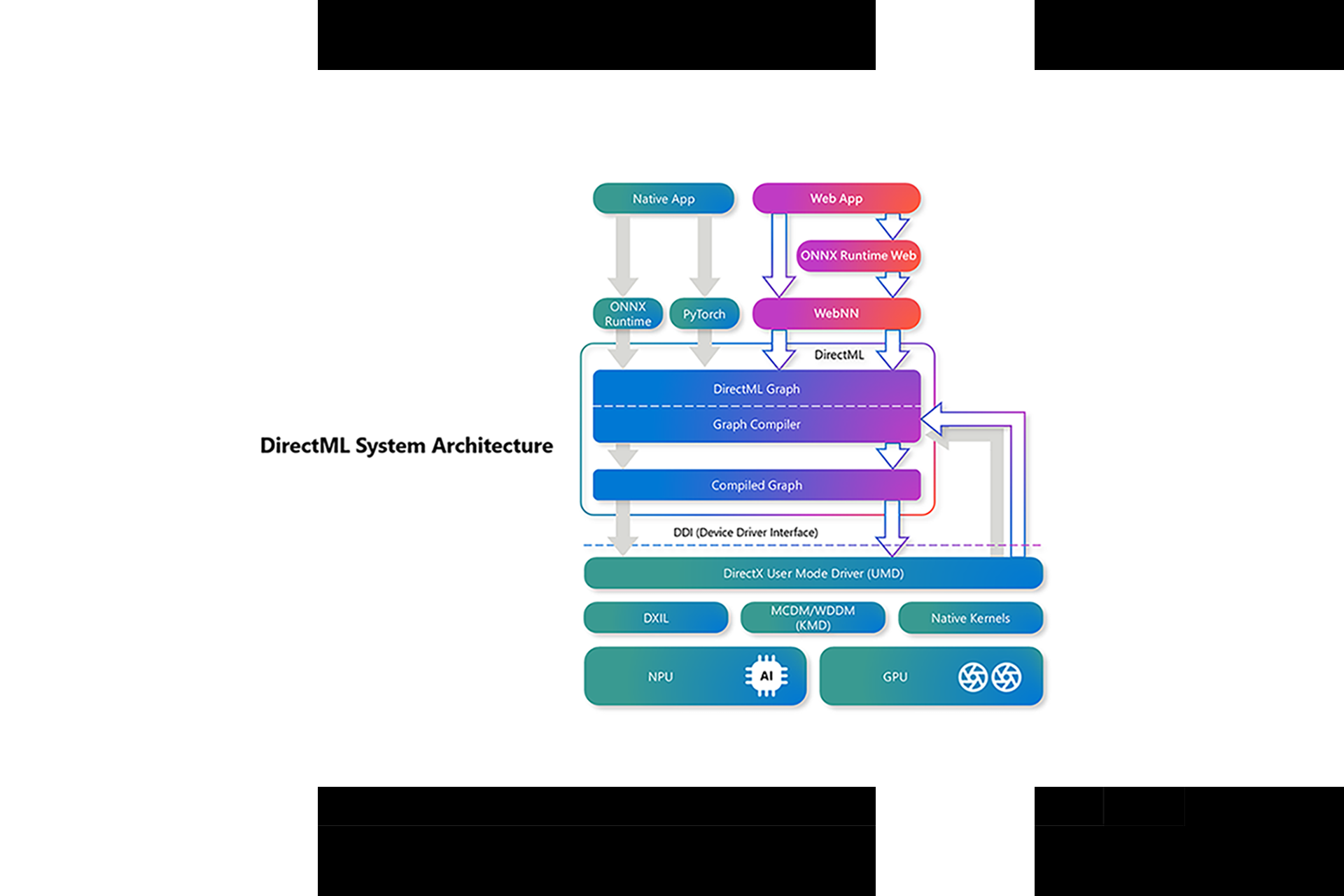

Microsoft announces WebNN API in Edge

Just a few days after Google announced built-in AI for Chrome, Microsoft followed suit and presented a WebNN integration in Edge. While it is not yet clear what the Chrome built-in AI Web API will look like, Microsoft implements the novel W3C Web Neural Network API (WebNN).
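Since support for these APIs still differs between browsers, a page would usually feature-detect before choosing where to run a model. The following is a minimal sketch under a few assumptions: it only checks whether the WebNN entry point (`navigator.ml`) and the WebGPU entry point (`navigator.gpu`) are exposed, the preference order WebNN, then WebGPU, then WASM is my own choice, and WASM is treated as an always-available fallback. The names `NavigatorLike`, `Backend` and `availableBackends` are hypothetical helpers, not part of any library.

```typescript
// Minimal shape of the parts of `navigator` this sketch looks at.
// `ml` is the WebNN entry point, `gpu` the WebGPU entry point.
interface NavigatorLike {
  ml?: unknown;
  gpu?: unknown;
}

type Backend = "webnn" | "webgpu" | "wasm";

// Returns the backends that appear to be exposed, in an assumed order of
// preference. Presence of a property does not guarantee usable hardware:
// a real app would still need to request a WebNN context or a WebGPU
// adapter and handle failure.
function availableBackends(nav: NavigatorLike): Backend[] {
  const backends: Backend[] = [];
  if (nav.ml != null) backends.push("webnn");
  if (nav.gpu != null) backends.push("webgpu");
  backends.push("wasm"); // assumed fallback that is always present
  return backends;
}
```

In a browser this would be called with the global `navigator` object (possibly with a type cast, depending on your TypeScript setup), and the first entry of the result is the preferred backend under the assumptions above.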

Read the full story here:

If you are interested in learning more about WebNN, check out this link:

Showcases

Transformers.js & ONNX

Xenova impresses again with spectacular model demos.

Phi-3

The first is a WebGPU-optimised version of Phi-3, built in collaboration with the ONNX team. It runs at a spectacular ~20 tok/sec on my MacBook M1. Check out the demo here:

And read the background story here:

Moondream

Imho even more spectacular than Phi-3 is the release of Moondream, a vision language model running right in the browser. Upload an image and ask questions about it. Try it out here:

Upcoming conferences

On our own behalf

Are you working on a cool project with AI in the browser that you’d like to share? I’d be especially interested in art projects using AI in the browser. Send us an email to [email protected].